Abstract

We present CineVerse, a novel framework for the task of cinematic scene composition. Similar to traditional multi-shot generation, our task emphasizes consistency and continuity across frames. However, it also addresses challenges inherent to filmmaking, such as multiple characters, complex interactions, and visual cinematic effects. To learn to generate such content, we first create the CineVerse dataset, which we use to train our proposed two-stage approach. First, we prompt a large language model (LLM) with task-specific instructions to take in a high-level scene description and generate a detailed plan for the overall setting and characters, as well as the individual shots. Then, we fine-tune a text-to-image generation model to synthesize high-quality visual keyframes. Experimental results demonstrate that CineVerse yields promising improvements in generating visually coherent and contextually rich movie scenes, paving the way for further exploration in cinematic video synthesis.
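To make the first stage more concrete, the sketch below shows one way a planning prompt could be assembled from task-specific instructions, a few in-context examples, and the user's scene description. The instruction wording, the `SETTING`/`CHARACTERS`/`SHOTS` section names, and the `build_planning_prompt` helper are all hypothetical; this is not the prompt used by CineVerse.

```python
# Hypothetical sketch: assembling an in-context planning prompt for Stage 1.
# The instruction text, section names, and example format are assumptions,
# not the prompt used by CineVerse.
from typing import List, Tuple

INSTRUCTIONS = (
    "You are a film director's assistant. Given a short scene description, "
    "write a scene plan with three sections:\n"
    "SETTING: one paragraph describing the location, time, and lighting.\n"
    "CHARACTERS: one line per character with a unique, fixed appearance.\n"
    "SHOTS: numbered shots, each starting with a shot size "
    "(e.g. wide, medium, close-up) followed by the action."
)


def build_planning_prompt(scene: str, examples: List[Tuple[str, str]]) -> str:
    """Concatenate the instructions, few-shot (description, plan) pairs, and the query."""
    parts = [INSTRUCTIONS]
    for desc, plan in examples:
        parts.append(f"Scene description: {desc}\nScene plan:\n{plan}")
    # The query ends with an open "Scene plan:" slot for the LLM to complete.
    parts.append(f"Scene description: {scene}\nScene plan:")
    return "\n\n".join(parts)
```

The returned string would be sent to whichever LLM is used for planning; placing worked examples before the query is the usual way to steer the model toward a fixed output structure without fine-tuning it.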

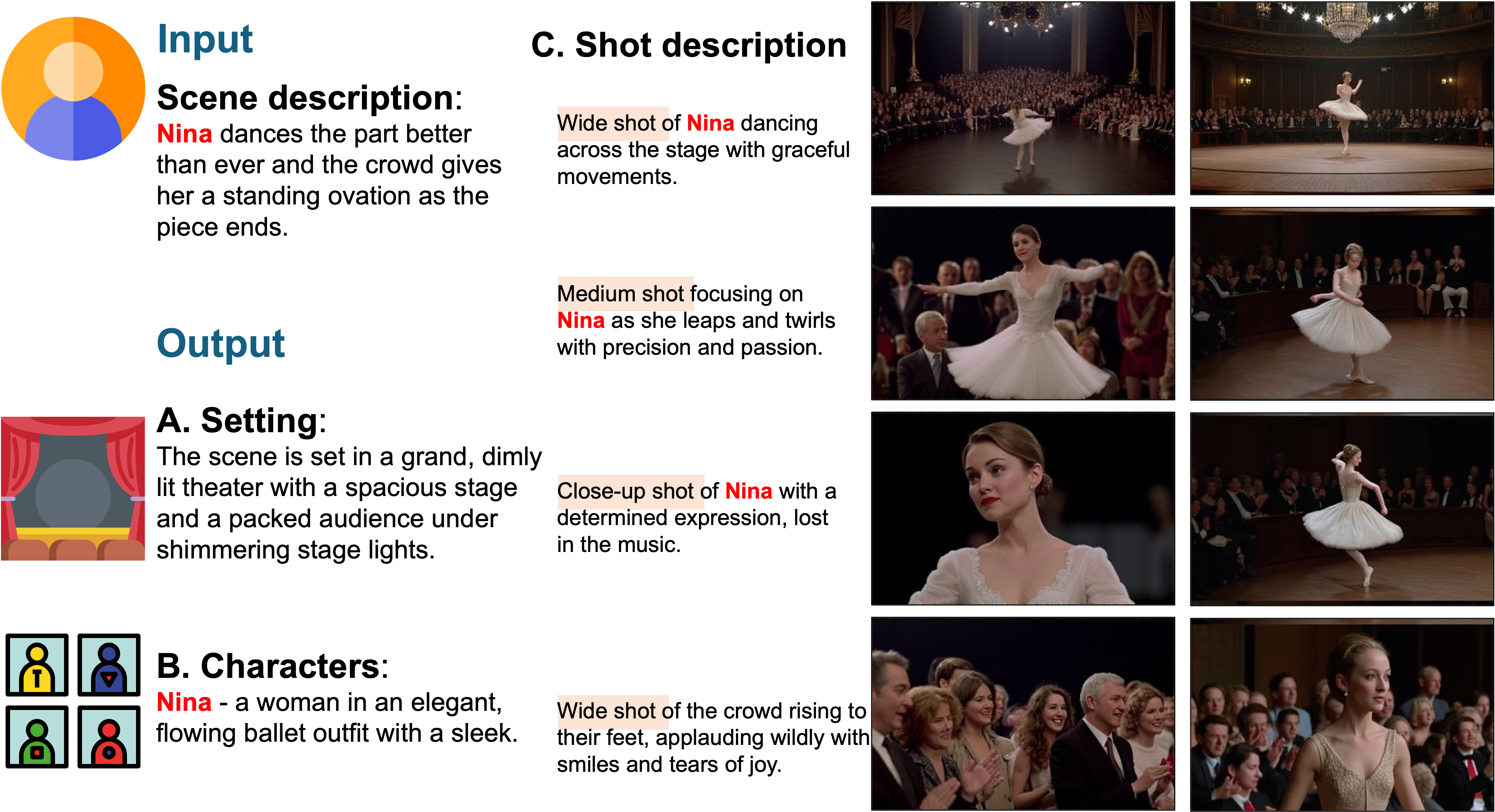

Teaser

Given a simple scene description, we prompt a pre-trained language model to generate the setting, characters with unique appearances, and detailed shot descriptions with explicit shot sizes. We then use this detailed scene plan to synthesize consistent keyframes with a text-to-image model that we fine-tune from IC-LoRA specifically for the cinematic scene composition task. Compared to the baseline IC-LoRA, our results show improved text-image alignment, consistency, and continuity.
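IC-LoRA-style models generate several related images together as panels of a single canvas. Under the assumption that CineVerse keeps this layout (panels placed side by side, described by one prompt with per-panel tags), the sketch below builds such a set-level prompt and computes the crop boxes that would split the canvas back into individual keyframes. The `[IMAGEk]` tag format, the horizontal layout, and both helper names are hypothetical.

```python
# Hypothetical sketch: an IC-LoRA-style "set" prompt plus crop boxes that split
# a side-by-side canvas into keyframes. The [IMAGEk] tags, horizontal layout,
# and helper names are assumptions, not the CineVerse implementation.
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (left, upper, right, lower), as used by PIL


def build_set_prompt(setting: str, shots: List[str]) -> str:
    """Describe the whole set first, then each panel with an [IMAGEk] tag."""
    header = f"This set of {len(shots)} cinematic keyframes shows one scene. {setting}"
    panels = [f"[IMAGE{i}] {shot}" for i, shot in enumerate(shots, start=1)]
    return " ".join([header] + panels)


def panel_boxes(width: int, height: int, n: int) -> List[Box]:
    """Split a width x height canvas into n equal side-by-side crop boxes."""
    if n <= 0 or width % n != 0:
        raise ValueError("canvas width must be divisible by the panel count")
    w = width // n
    return [(i * w, 0, (i + 1) * w, height) for i in range(n)]
```

Each box follows the `(left, upper, right, lower)` convention, so it could be passed directly to Pillow's `Image.crop` to recover one keyframe from the generated canvas.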

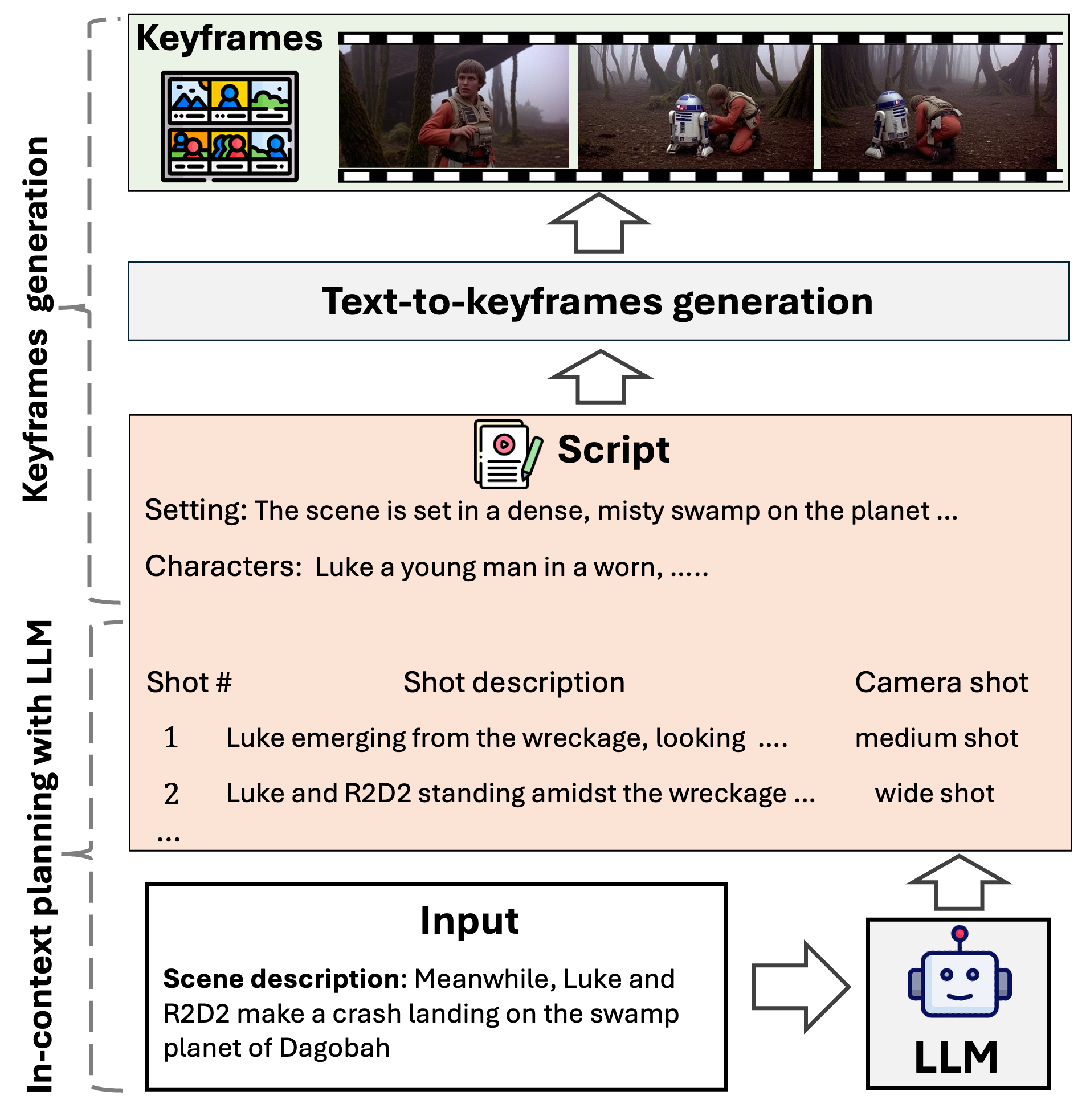

Overview

Method overview. In Stage 1, given the scene description as input, we leverage an LLM for in-context planning to produce a detailed script. This script consists of 1) Setting: a background description of the scene, 2) Characters: individual characters with their unique appearances, and 3) Shot descriptions: the context and actions of the characters, along with the specified camera shots. In Stage 2, we use the generated script to synthesize multiple keyframes with text-to-image models fine-tuned on the proposed CineVerse dataset.
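The three-part script can be pictured as a small data structure. The sketch below is one possible encoding, with hypothetical `Shot` and `Script` classes, and a `shot_prompts` helper that turns each shot into a text-to-image prompt by repeating the shared setting and the appearance of every character in frame. Reusing identical appearance text across shots is only one simple way to encourage consistency; it is an assumption, not a description of how CineVerse conditions its model.

```python
# Hypothetical sketch of the Stage 1 script and a per-shot prompt builder.
# Class names, fields, and the prompt template are assumptions.
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Shot:
    size: str              # shot size, e.g. "wide", "medium", "close-up"
    description: str       # context and action of the characters
    characters: List[str]  # names of characters visible in this shot


@dataclass
class Script:
    setting: str                 # background description of the scene
    characters: Dict[str, str]   # character name -> unique appearance
    shots: List[Shot] = field(default_factory=list)


def shot_prompts(script: Script) -> List[str]:
    """Build one prompt per shot, repeating setting and appearances verbatim."""
    prompts = []
    for shot in script.shots:
        parts = [f"{shot.size.capitalize()} shot.", script.setting]
        for name in shot.characters:
            parts.append(f"{name}: {script.characters[name]}.")
        parts.append(shot.description)
        prompts.append(" ".join(parts))
    return prompts
```

For example, a script with the setting "A rain-soaked alley at night." and a wide shot of one character yields a prompt that starts with "Wide shot. A rain-soaked alley at night." followed by that character's fixed appearance and the action.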

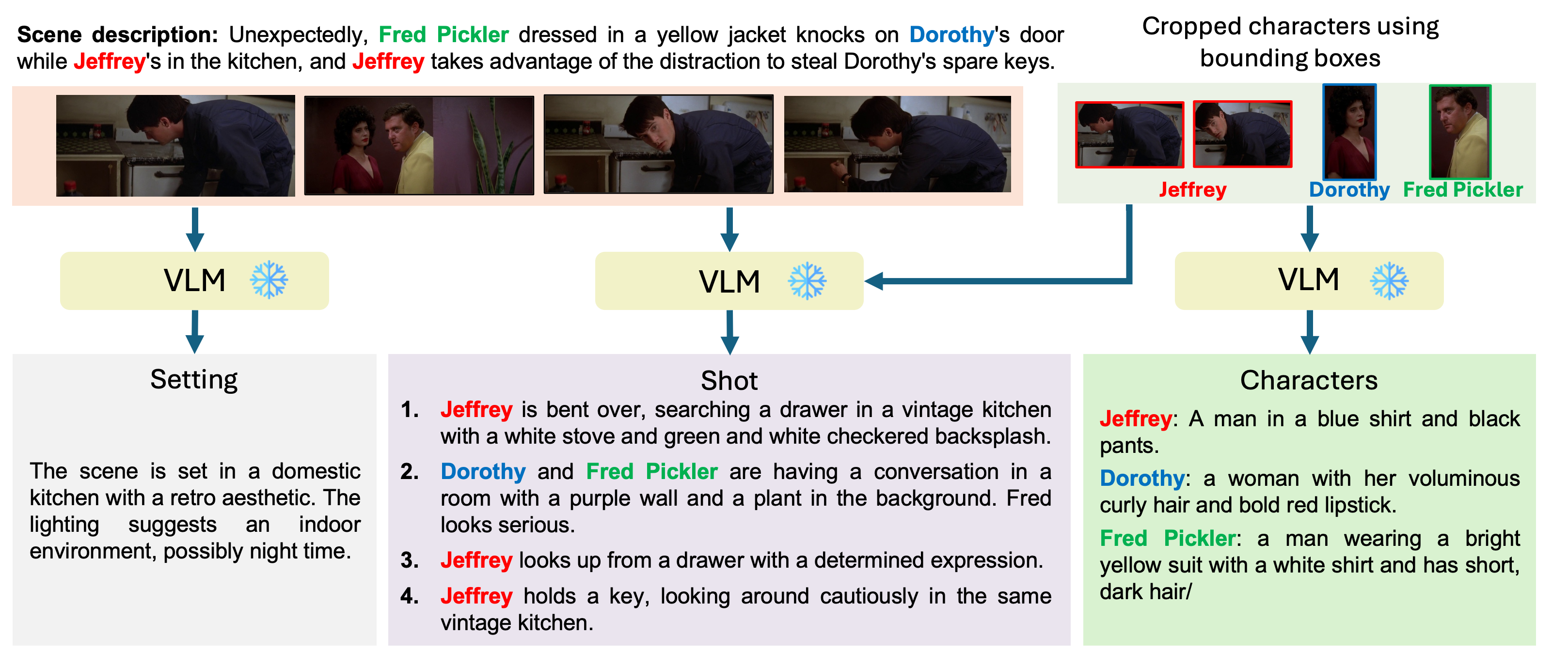

Data processing

We use a pre-trained vision-language model (VLM) to extract the setting description, shot details, and character appearances.
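When a VLM is asked to return structured annotations, its output usually needs validation before it enters a dataset. The sketch below assumes the VLM emits JSON with `setting`, `characters`, and `shots` keys (each shot carrying `size` and `description`); this schema and the `parse_vlm_annotation` helper are hypothetical and are not the CineVerse annotation format.

```python
# Hypothetical sketch: validating one VLM annotation before adding it to a
# dataset. The JSON schema ("setting", "characters", "shots", and per-shot
# "size"/"description") is an assumption, not the CineVerse format.
import json
from typing import Any, Dict

REQUIRED_KEYS = ("setting", "characters", "shots")
SHOT_KEYS = ("size", "description")


def parse_vlm_annotation(raw: str) -> Dict[str, Any]:
    """Parse a JSON string from the VLM and check that required fields exist."""
    record = json.loads(raw)
    if not isinstance(record, dict):
        raise ValueError("annotation must be a JSON object")
    missing = [key for key in REQUIRED_KEYS if key not in record]
    if missing:
        raise ValueError(f"annotation missing fields: {missing}")
    if not isinstance(record["characters"], dict):
        raise ValueError("'characters' must map each name to an appearance")
    for i, shot in enumerate(record["shots"]):
        # Each shot needs a shot size and a description of the action.
        if not isinstance(shot, dict) or not all(k in shot for k in SHOT_KEYS):
            raise ValueError(f"shot {i} needs 'size' and 'description'")
    return record
```

Malformed JSON raises `json.JSONDecodeError`, which is a subclass of `ValueError`, so a caller can catch `ValueError` once and skip or re-query any clip whose annotation fails either parsing or the schema checks.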